AI Security: The Next Frontier

Preventing hidden threats in generative AI applications and infrastructure

Security then and now: Why AI Security is Different

Traditional security platforms, such as data security posture management and cloud security posture management tools, protect an organization’s IT applications and infrastructure, through network and app protection, regulatory compliance, sensitive data protection, and access controls. However, LLMs and generative AI models have introduced new security challenges that previous platforms do not cover. These models are characterized by dynamic responses and user interactions, and can be accessed by their own APIs, adding a new layer of complexity to existing security issues. These models are continuously evolving and exhibit behavior that can also be unpredictable, because users can potentially manipulate them.

LLMs can access information from the internet, write and execute code, receive and produce multimodal data (e.g. text, video, images, and audio), and interact with databases and internal memory. They are being implemented for diverse enterprise use cases with different levels of access to data and IT controls, thus opening up more access points for attackers. Finally, the rise of autonomous AI agents also underscores the growing importance of security in this space. As many tasks are being automated with little human oversight, this requires more sophisticated techniques to respond to attacks on AI models quickly.

Source: World Economic Forum and Accenture, Global Cybersecurity Outlook 2024

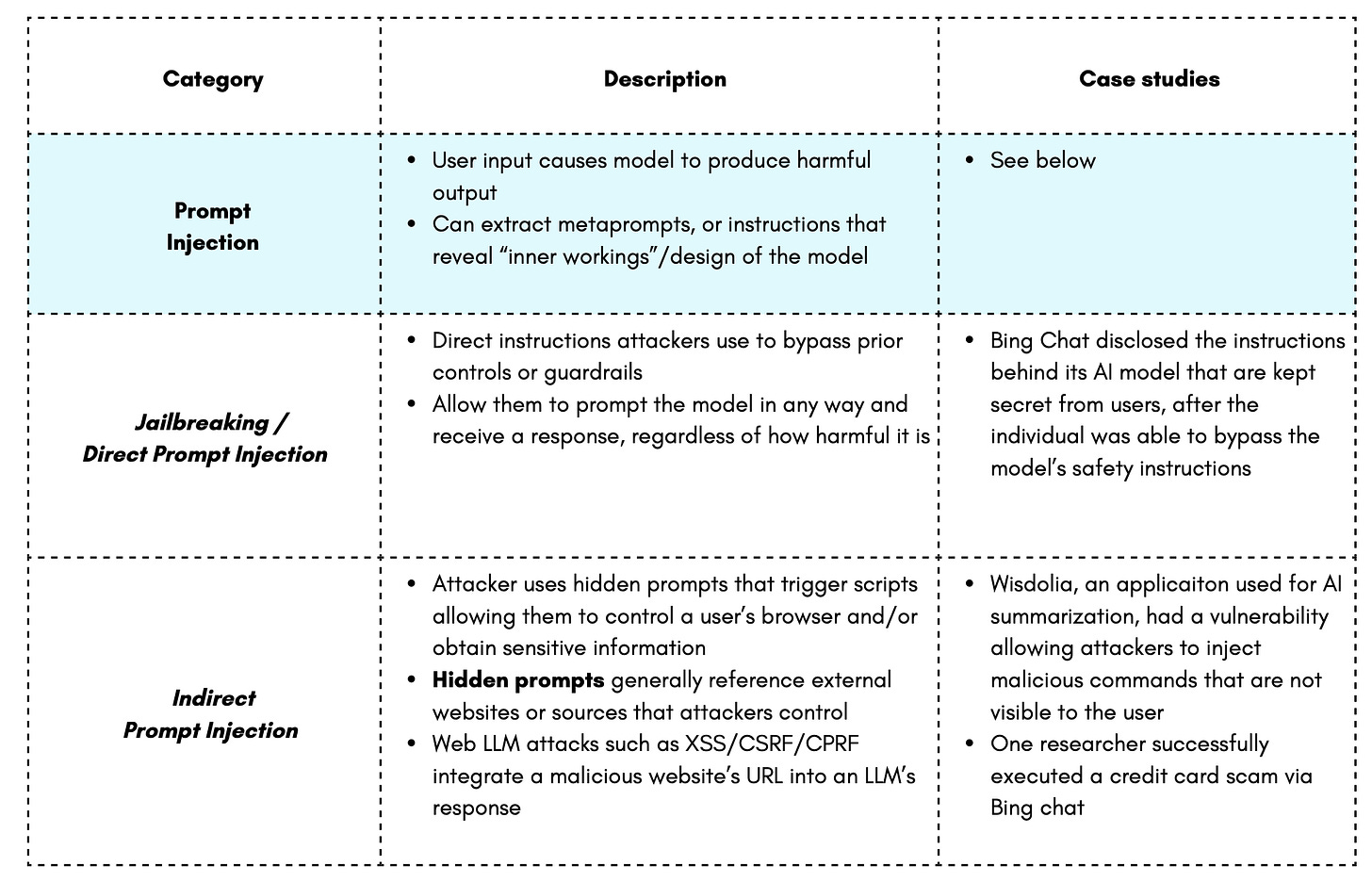

The ML Threat Landscape

There are a number of security risks that new generative models pose. Attackers can collect sensitive information, including PII, proprietary training data and models, confidential company and employee details, and credentials used for authentication and authorization procedures. This information can facilitate more effective scams and fraudulent activities, such as phishing and impersonation, spreading malware and malicious code, leading to long-term consequences. ML security threats also put backend IT systems at risk, potentially giving attackers complete control of generative models, APIs and other integrations, as well as an organization’s technology infrastructure and computing power.

Ultimately, such attacks can expose an organization’s proprietary models, data, confidential and critical information, and even client data, putting other organizations at risk as well.

See my previous article on AI infrastructure, in case you need a quick primer on the components of AI model development.

In white box attacks, the attacker has complete access to the model information and access privileges. However, most attackers are conducting black box attacks, when they have little to no information about how the system works. They can simply interact with the model as any normal user, through prompts and responses, but are able to exploit certain vulnerabilities and behaviors in order to conduct malicious activities. In the end, the interconnectedness of AI models, data sources, and applications offers many different points of access for bad actors.

Source: MITRE ATLAS - AI Security Overview

Overview of ML Security Threats

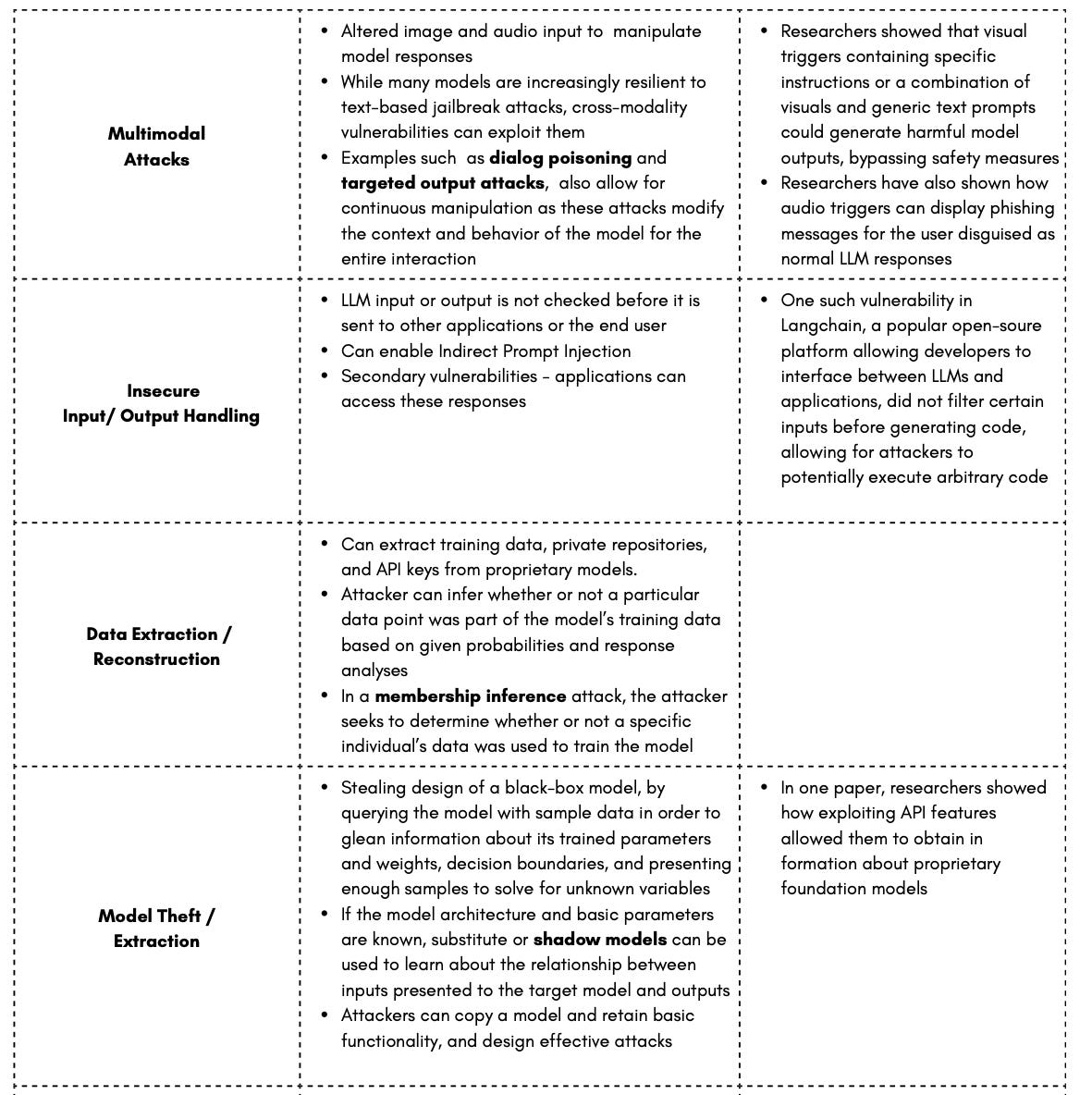

The next set of risks stem from vulnerabilities in third-party tools and practices in the model development stack:

These include more targeted attacks on the datasets and model beyond prompting and platform security:

The last set of threats are general problems, but also new present vulnerabilities and considerations from an ML security perspective.

Source: The Innovation Equation

The next post will dive into some potential and experimental solutions, the current landscape of ML security tools, and why this area still remains an open and evolving challenge.

Further reading:

References in table:

Jailbreaking/Direct Prompt Injection – Bing Chat Instructions

Indirect Prompt Injection: Wisdolia example, Bing Chat credit card scam

Supply chain attacks: Hugging Face stolen tokens, malicious models on HF

Data poisoning: Poisoned training datasets and Wikipedia example

Exploiting APIs and plugins: Cloudflare example

Infrastructure Vulnerabilities: Ray compute framework example

Insecure Input/Output Handling: Langchain example

Multimodal attacks: visual triggers to bypass guardrails, dialog poisoning and audio triggers

Model theft/extraction: model theft example, shadow model training

Package hallucinations: Pytorch example, Lasso security experiment

Shadow vulnerabilities: Samsung data leak example